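1. Configuring software RAID 1 on CentOS 7

Prepare two new disks, ideally with the same capacity and rotational speed. Here the system had to be rebooted before the newly added disks were detected:

- List all disks: [root@linux-node1 ~]# fdisk -l

- [root@linux-node1 ~]# fdisk /dev/sdb

- Create the partition /dev/sdb1, change its ID to fd (Linux raid autodetect), then press w to save and exit

- [root@linux-node1 ~]# fdisk /dev/sdc

- Create the partition /dev/sdc1, change its ID to fd (Linux raid autodetect), then press w to save and exit

- Run partprobe /dev/sdb and partprobe /dev/sdc so the Linux kernel re-reads the partition tables

- Run mdadm -C /dev/md0 -l 1 -a yes -n 2 /dev/sdb1 /dev/sdc1 to create the software RAID array md0

- mkfs -t ext4 /dev/md0 formats the RAID device

- mkdir /raid && mount /dev/md0 /raid creates the mount point and mounts the array

- vim /etc/fstab and add the device so it is mounted at boot

- /dev/md0 /raid ext4 defaults 0 0

- mount -a checks that the new /etc/fstab entry is valid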
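The fstab step above is easy to repeat by accident, which leaves duplicate entries. A minimal sketch of an idempotent alternative to editing the file by hand follows. The function name `add_fstab_entry` is hypothetical, and the file path is a parameter so the function can be tried on a copy before it touches /etc/fstab.

```shell
#!/bin/sh
# add_fstab_entry FILE DEVICE MOUNTPOINT FSTYPE   (hypothetical helper)
# Appends "DEVICE MOUNTPOINT FSTYPE defaults 0 0" to FILE unless a
# non-comment line in FILE already uses MOUNTPOINT.
add_fstab_entry() {
    fstab_file=$1
    device=$2
    mountpoint=$3
    fstype=$4
    # Field 2 of an fstab line is the mount point; awk exits 0 if it is found.
    if awk -v mp="$mountpoint" \
        '$1 !~ /^#/ && $2 == mp { found = 1 } END { exit !found }' \
        "$fstab_file"; then
        echo "entry for $mountpoint already present in $fstab_file" >&2
        return 0
    fi
    printf '%s %s %s defaults 0 0\n' "$device" "$mountpoint" "$fstype" >> "$fstab_file"
}

# Example (run as root, then validate with mount -a):
#   add_fstab_entry /etc/fstab /dev/md0 /raid ext4 && mount -a
```

Running the function twice with the same arguments leaves a single entry in the file.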

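2. Ubuntu 18.04.5

2.1 A side note

- Before building the software RAID, Ubuntu 18.04.5 was installed twice. The first install targeted the 500 GB mechanical disk /dev/sda and was aborted halfway through.

- The second install went to the 240 GB SSD /dev/sdb, and the system now boots from that SSD.

- The running system showed an LV mounted at /, but lvdisplay listed two LVs that both claimed /, one on each disk: one active and one inactive. I wanted to delete the inactive one but found no command that did it; it only disappeared after a reboot. The steps were as follows: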

root@ubuntu-18:/etc/apt# pvdisplay

--- Physical volume ---

PV Name /dev/sdb5

VG Name ubuntu-18-vg

PV Size <222.62 GiB / not usable 2.00 MiB

Allocatable yes (but full)

PE Size 4.00 MiB

Total PE 56989

Free PE 0

Allocated PE 56989

PV UUID idWKzJ-wlWM-W1TV-4dqs-dbac-5K2v-C2kbSW

--- Physical volume ---

PV Name /dev/sda5

VG Name ubuntu-18-vg

PV Size <464.81 GiB / not usable 0

Allocatable yes (but full)

PE Size 4.00 MiB

Total PE 118990

Free PE 0

Allocated PE 118990

PV UUID r0apGr-033N-9Eo2-r6he-rlIy-fepN-hmXdBF

root@ubuntu-18:/etc/apt# vgdisplay

--- Volume group ---

VG Name ubuntu-18-vg

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 2

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 1

Open LV 1

Max PV 0

Cur PV 1

Act PV 1

VG Size 222.61 GiB

PE Size 4.00 MiB

Total PE 56989

Alloc PE / Size 56989 / 222.61 GiB

Free PE / Size 0 / 0

VG UUID jC6Pgy-fjgQ-yP8m-ZSGI-XGrb-VN7W-JF1CHR

--- Volume group ---

VG Name ubuntu-18-vg

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 2

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 1

Open LV 0

Max PV 0

Cur PV 1

Act PV 1

VG Size 464.80 GiB

PE Size 4.00 MiB

Total PE 118990

Alloc PE / Size 118990 / 464.80 GiB

Free PE / Size 0 / 0

VG UUID A9eqdH-5erA-oSje-eg4I-PJu0-Y6XP-SJhfSj

root@ubuntu-18:/etc/apt# lvdisplay

--- Logical volume ---

LV Path /dev/ubuntu-18-vg/root

LV Name root

VG Name ubuntu-18-vg

LV UUID hQdPeT-9evX-ncOs-xoId-w1Az-XMsA-JXAbWE

LV Write Access read/write

LV Creation host, time ubuntu-18, 2022-11-24 21:58:25 +0800

LV Status available

# open 1

LV Size 222.61 GiB

Current LE 56989

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 253:0

--- Logical volume ---

LV Path /dev/ubuntu-18-vg/root

LV Name root

VG Name ubuntu-18-vg

LV UUID ifOmAN-zW2N-KrIp-zZDH-R5ST-7RIQ-HTn97u

LV Write Access read/write

LV Creation host, time ubuntu-18, 2022-11-24 21:50:11 +0800

LV Status NOT available # inactive; this is the one I wanted to delete

LV Size 464.80 GiB

Current LE 118990

Segments 1

Allocation inherit

Read ahead sectors auto

root@ubuntu-18:/etc/apt# df -TH

Filesystem Type Size Used Avail Use% Mounted on

udev devtmpfs 4.2G 0 4.2G 0% /dev

tmpfs tmpfs 827M 914k 826M 1% /run

/dev/mapper/ubuntu--18--vg-root xfs 239G 4.3G 235G 2% / # only the SSD is actually mounted

tmpfs tmpfs 4.2G 0 4.2G 0% /dev/shm

tmpfs tmpfs 5.3M 0 5.3M 0% /run/lock

tmpfs tmpfs 4.2G 0 4.2G 0% /sys/fs/cgroup

/dev/sdb1 ext4 991M 64M 859M 7% /boot

tmpfs tmpfs 827M 0 827M 0% /run/user/0

root@ubuntu-18:/etc/apt# mkfs --type xfs -f /dev/sda # format disk sda

root@ubuntu-18:/etc/apt# mkfs --type xfs -f /dev/sdc # format disk sdc

root@ubuntu-18:/etc/apt# lvdisplay

--- Logical volume ---

LV Path /dev/ubuntu-18-vg/root

LV Name root

VG Name ubuntu-18-vg

LV UUID hQdPeT-9evX-ncOs-xoId-w1Az-XMsA-JXAbWE

LV Write Access read/write

LV Creation host, time ubuntu-18, 2022-11-24 21:58:25 +0800

LV Status available

# open 1

LV Size 222.61 GiB

Current LE 56989

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 253:0

WARNING: Device for PV r0apGr-033N-9Eo2-r6he-rlIy-fepN-hmXdBF not found or rejected by a filter. # the PV that lived on /dev/sda is no longer usable

--- Logical volume ---

LV Path /dev/ubuntu-18-vg/root

LV Name root

VG Name ubuntu-18-vg

LV UUID ifOmAN-zW2N-KrIp-zZDH-R5ST-7RIQ-HTn97u

LV Write Access read/write

LV Creation host, time ubuntu-18, 2022-11-24 21:50:11 +0800

LV Status NOT available

LV Size 464.80 GiB

Current LE 118990

Segments 1

Allocation inherit

Read ahead sectors auto

Note: the change only took effect after a reboot, after which the stale LV, VG and PV were no longer listed.

2.2 Creating software RAID 1

# Install the software RAID tool

root@office:~# apt install -y mdadm

# List all block devices

root@office:~# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 465.8G 0 disk

sdb 8:16 0 223.6G 0 disk

|-sdb1 8:17 0 976M 0 part /boot

|-sdb2 8:18 0 1K 0 part

`-sdb5 8:21 0 222.6G 0 part

`-ubuntu--18--vg-root 253:0 0 222.6G 0 lvm /

sdc 8:32 0 465.8G 0 disk

# sudo mdadm --create --verbose /dev/md1 --level=1 --raid-devices=2 /dev/sda /dev/sdc #software raid 1

# sudo mdadm --create --verbose /dev/md5 --level=5 --raid-devices=3 /dev/sda /dev/sdc /dev/sdd #software raid 5

# sudo mdadm --create --verbose /dev/md6 --level=6 --raid-devices=4 /dev/sda /dev/sdc /dev/sdd /dev/sde #software raid 6

# Create the software RAID 1 array

root@office:~# sudo mdadm --create --verbose /dev/md0 --level=1 --raid-devices=2 /dev/sda /dev/sdc

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

mdadm: size set to 488254464K

mdadm: automatically enabling write-intent bitmap on large array

Continue creating array? y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md0 started.

# Show the software RAID status

root@office:~# cat /proc/mdstat

Personalities : [raid1]

md0 : active raid1 sdc[1] sda[0]

488254464 blocks super 1.2 [2/2] [UU]

[>....................] resync = 0.1% (653120/488254464) finish=62.2min speed=130624K/sec # resync at 0.1%

bitmap: 4/4 pages [16KB], 65536KB chunk

unused devices: <none>

root@office:~# cat /proc/mdstat

Personalities : [raid1]

md0 : active raid1 sdc[1] sda[0]

488254464 blocks super 1.2 [2/2] [UU]

[>....................] resync = 3.0% (15125888/488254464) finish=59.5min speed=132494K/sec # resync at 3%

bitmap: 4/4 pages [16KB], 65536KB chunk

unused devices: <none>
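Instead of re-reading /proc/mdstat by eye, the resync percentage can be extracted with a small awk filter. This is a sketch under the assumption that the file keeps the layout shown above. `resync_progress` is a hypothetical helper name, and it accepts a file argument so it can be tried on a saved copy of the output.

```shell
#!/bin/sh
# resync_progress [FILE]   (hypothetical helper)
# Prints "<array> <percent>" for every array in FILE (default /proc/mdstat)
# that is currently resyncing or recovering; prints nothing otherwise.
resync_progress() {
    awk '
        # Remember the array name from lines such as "md0 : active raid1 ..."
        /^md[0-9]+ :/ { dev = $1 }
        # Progress lines contain "resync = N%" or "recovery = N%"
        /(resync|recovery) *=/ {
            for (i = 1; i <= NF; i++)
                if ($i ~ /^[0-9.]+%$/) { print dev, $i; next }
        }
    ' "${1:-/proc/mdstat}"
}

# Example: poll once a minute until the resync is done
#   while [ -n "$(resync_progress)" ]; do resync_progress; sleep 60; done
```

mdadm also has a `--wait` option that blocks until resync activity on the given array has finished, which may be simpler when only completion matters.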

# Show details for all block devices

root@office:~# fdisk -l

Disk /dev/sda: 465.8 GiB, 500107862016 bytes, 976773168 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdb: 223.6 GiB, 240057409536 bytes, 468862128 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0xcb1878ad

Device Boot Start End Sectors Size Id Type

/dev/sdb1 * 2048 2000895 1998848 976M 83 Linux

/dev/sdb2 2002942 468860927 466857986 222.6G 5 Extended

/dev/sdb5 2002944 468860927 466857984 222.6G 8e Linux LVM

Disk /dev/sdc: 465.8 GiB, 500107862016 bytes, 976773168 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/ubuntu--18--vg-root: 222.6 GiB, 239029190656 bytes, 466853888 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/md0: 465.7 GiB, 499972571136 bytes, 976508928 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

---

# Format the block device with an xfs filesystem

root@office:~# mkfs --type xfs -f /dev/md0

meta-data=/dev/md0 isize=512 agcount=4, agsize=30515904 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=0, rmapbt=0, reflink=0

data = bsize=4096 blocks=122063616, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=59601, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

# Mount the storage directory

root@office:~# mkdir -p /data

root@office:~# mount /dev/md0 /data/

root@office:~# df -TH

Filesystem Type Size Used Avail Use% Mounted on

udev devtmpfs 4.2G 0 4.2G 0% /dev

tmpfs tmpfs 827M 898k 826M 1% /run

/dev/mapper/ubuntu--18--vg-root xfs 239G 4.3G 235G 2% /

tmpfs tmpfs 4.2G 0 4.2G 0% /dev/shm

tmpfs tmpfs 5.3M 0 5.3M 0% /run/lock

tmpfs tmpfs 4.2G 0 4.2G 0% /sys/fs/cgroup

/dev/sdb1 ext4 991M 65M 859M 7% /boot

tmpfs tmpfs 827M 0 827M 0% /run/user/0

/dev/md0 xfs 500G 532M 500G 1% /data

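# Save the array layout

To make sure the array is reassembled automatically at boot, /etc/mdadm/mdadm.conf has to be updated. The following command scans the active arrays and appends the result to the file: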

root@office:~# sudo mdadm --detail --scan | sudo tee -a /etc/mdadm/mdadm.conf

ARRAY /dev/md0 metadata=1.2 name=office.hs.com:0 UUID=8c669a34:60d603ea:2ac3604a:2b191b74

root@office:~# cat /etc/mdadm/mdadm.conf

HOMEHOST <system>

MAILADDR root

ARRAY /dev/md0 metadata=1.2 name=office.hs.com:0 UUID=8c669a34:60d603ea:2ac3604a:2b191b74
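Because `tee -a` appends unconditionally, running the scan twice leaves duplicate ARRAY lines in mdadm.conf. A hedged sketch of an idempotent variant follows; `append_new_arrays` is a hypothetical helper that only appends ARRAY lines whose UUID is not yet in the file.

```shell
#!/bin/sh
# append_new_arrays CONF   (hypothetical helper)
# Reads "ARRAY ..." lines on stdin (the format printed by
# `mdadm --detail --scan`) and appends to CONF only those whose
# UUID= value does not already occur in CONF.
append_new_arrays() {
    conf=$1
    while IFS= read -r line; do
        case $line in
            ARRAY\ *) ;;          # only ARRAY lines are of interest
            *) continue ;;
        esac
        uuid=$(printf '%s\n' "$line" | sed -n 's/.*UUID=\([0-9a-fA-F:]*\).*/\1/p')
        [ -n "$uuid" ] || continue
        if ! grep -q "UUID=$uuid" "$conf" 2>/dev/null; then
            printf '%s\n' "$line" >> "$conf"
        fi
    done
}

# Example (as root):
#   mdadm --detail --scan | append_new_arrays /etc/mdadm/mdadm.conf
```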

# Update the initramfs (initial RAM filesystem) so the array is available early in the boot process:

root@office:~# sudo update-initramfs -u

update-initramfs: Generating /boot/initrd.img-4.15.0-112-generic

----------

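# If the array originally named /dev/md1 comes up as /dev/md127 after a reboot, stop it first (mdadm --stop /dev/md127) and reassemble it under the intended name:

mdadm --assemble /dev/md1 --name=1 --update=name /dev/sda1 /dev/sdb1

# If the array originally named /dev/md2 comes up as /dev/md127, do the same for md2:

mdadm --assemble /dev/md2 --name=2 --update=name /dev/sda1 /dev/sdb1

# Finally, run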

sudo update-initramfs -u

----------

# Add the new filesystem to /etc/fstab so it is mounted automatically at boot:

root@office:~# echo '/dev/md0 /data xfs defaults 0 0' | sudo tee -a /etc/fstab

/dev/md0 /data xfs defaults 0 0

# Check that the fstab entry mounts correctly

root@office:~# umount /data

root@office:~# mount -a

root@office:~# df -TH

Filesystem Type Size Used Avail Use% Mounted on

udev devtmpfs 4.2G 0 4.2G 0% /dev

tmpfs tmpfs 827M 898k 826M 1% /run

/dev/mapper/ubuntu--18--vg-root xfs 239G 4.3G 235G 2% /

tmpfs tmpfs 4.2G 0 4.2G 0% /dev/shm

tmpfs tmpfs 5.3M 0 5.3M 0% /run/lock

tmpfs tmpfs 4.2G 0 4.2G 0% /sys/fs/cgroup

/dev/sdb1 ext4 991M 65M 859M 7% /boot

tmpfs tmpfs 827M 0 827M 0% /run/user/0

/dev/md0 xfs 500G 532M 500G 1% /data

# Reboot and check that everything comes up normally

root@office:~# cat /proc/mdstat

Personalities : [raid1]

md0 : active raid1 sdc[1] sda[0]

488254464 blocks super 1.2 [2/2] [UU]

[======>..............] resync = 31.3% (152994432/488254464) finish=48.2min speed=115736K/sec

bitmap: 3/4 pages [12KB], 65536KB chunk

unused devices: <none>

root@office:~# reboot

# State after the reboot: normal, as expected

root@office:~# df -TH

Filesystem Type Size Used Avail Use% Mounted on

udev devtmpfs 4.2G 0 4.2G 0% /dev

tmpfs tmpfs 827M 914k 826M 1% /run

/dev/mapper/ubuntu--18--vg-root xfs 239G 4.3G 235G 2% /

tmpfs tmpfs 4.2G 0 4.2G 0% /dev/shm

tmpfs tmpfs 5.3M 0 5.3M 0% /run/lock

tmpfs tmpfs 4.2G 0 4.2G 0% /sys/fs/cgroup

/dev/sdb1 ext4 991M 65M 859M 7% /boot

/dev/md0 xfs 500G 532M 500G 1% /data

tmpfs tmpfs 827M 0 827M 0% /run/user/0

root@office:~# cat /proc/mdstat

Personalities : [raid1] [linear] [multipath] [raid0] [raid6] [raid5] [raid4] [raid10]

md0 : active raid1 sda[0] sdc[1]

488254464 blocks super 1.2 [2/2] [UU]

[======>..............] resync = 32.0% (156609536/488254464) finish=47.3min speed=116771K/sec

bitmap: 3/4 pages [12KB], 65536KB chunk

unused devices: <none>

---

# State once the resync has finished

root@office:~# cat /proc/mdstat

Personalities : [raid1] [linear] [multipath] [raid0] [raid6] [raid5] [raid4] [raid10]

md0 : active raid1 sda[0] sdc[1]

488254464 blocks super 1.2 [2/2] [UU]

bitmap: 0/4 pages [0KB], 65536KB chunk

unused devices: <none>

2.3 Creating LVM on software RAID

# Create an LVM partition on top of the software RAID array

root@office:~# fdisk -l /dev/md0

Disk /dev/md0: 465.7 GiB, 499972571136 bytes, 976508928 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0x3637f591

Device Boot Start End Sectors Size Id Type

/dev/md0p1 2048 976508927 976506880 465.7G 8e Linux LVM

# Create the PV

root@office:~# pvcreate /dev/md0p1

Physical volume "/dev/md0p1" successfully created.

root@office:~# pvdisplay

--- Physical volume ---

PV Name /dev/md0p1

VG Name data

PV Size 465.63 GiB / not usable 2.00 MiB

Allocatable yes

PE Size 4.00 MiB

Total PE 119202

Free PE 119202

Allocated PE 0

PV UUID bN1emA-z0g0-uwoR-gm4X-Hj3h-jIEY-siJeL4

--- Physical volume ---

PV Name /dev/sdb5

VG Name ubuntu-18-vg

PV Size <222.62 GiB / not usable 2.00 MiB

Allocatable yes (but full)

PE Size 4.00 MiB

Total PE 56989

Free PE 0

Allocated PE 56989

PV UUID idWKzJ-wlWM-W1TV-4dqs-dbac-5K2v-C2kbSW
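The sizes that LVM reports follow directly from Total PE and PE Size in the pvdisplay output above. A small sketch of that arithmetic, with `pe_to_gib` as a hypothetical helper name:

```shell
#!/bin/sh
# pe_to_gib TOTAL_PE PE_SIZE_MIB   (hypothetical helper)
# Converts a number of physical extents into GiB, rounded to two decimals.
pe_to_gib() {
    awk -v pe="$1" -v size="$2" 'BEGIN { printf "%.2f\n", pe * size / 1024 }'
}

pe_to_gib 119202 4   # extents on /dev/md0p1 -> 465.63
pe_to_gib 56989 4    # extents on /dev/sdb5  -> 222.61
```

The first result matches the 465.63 GiB reported for /dev/md0p1, and the second matches the 222.61 GiB VG size of ubuntu-18-vg.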

# Create the VG

root@office:~# vgcreate data /dev/md0p1

Volume group "data" successfully created

root@office:~# vgdisplay

--- Volume group ---

VG Name data

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 1

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 0

Open LV 0

Max PV 0

Cur PV 1

Act PV 1

VG Size 465.63 GiB

PE Size 4.00 MiB

Total PE 119202

Alloc PE / Size 0 / 0

Free PE / Size 119202 / 465.63 GiB

VG UUID 53eZFT-NBTs-n7ZE-YqPH-P9r5-vFZU-A0pX66

--- Volume group ---

VG Name ubuntu-18-vg

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 2

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 1

Open LV 1

Max PV 0

Cur PV 1

Act PV 1

VG Size 222.61 GiB

PE Size 4.00 MiB

Total PE 56989

Alloc PE / Size 56989 / 222.61 GiB

Free PE / Size 0 / 0

VG UUID jC6Pgy-fjgQ-yP8m-ZSGI-XGrb-VN7W-JF1CHR

# Create the LV

root@office:~# lvcreate -l 100%FREE -n data-lv data

Logical volume "data-lv" created.

root@office:~# lvdisplay

--- Logical volume ---

LV Path /dev/data/data-lv

LV Name data-lv

VG Name data

LV UUID 8sWufC-pYOZ-URbg-pZyb-07O4-BBGc-GMgdB8

LV Write Access read/write

LV Creation host, time office.hs.com, 2022-11-24 17:28:01 +0800

LV Status available

# open 0

LV Size 465.63 GiB

Current LE 119202

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 253:1

--- Logical volume ---

LV Path /dev/ubuntu-18-vg/root

LV Name root

VG Name ubuntu-18-vg

LV UUID hQdPeT-9evX-ncOs-xoId-w1Az-XMsA-JXAbWE

LV Write Access read/write

LV Creation host, time ubuntu-18, 2022-11-24 21:58:25 +0800

LV Status available

# open 1

LV Size 222.61 GiB

Current LE 56989

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 253:0

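Note: if the LV is extended later, xfs_growfs /dev/vg1/lv1 has to be run (with the filesystem mounted) to grow the filesystem into the new space

# Format the LV with an xfs filesystem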

root@office:~# mkfs -t xfs /dev/data/data-lv

meta-data=/dev/data/data-lv isize=512 agcount=4, agsize=30515712 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=0, rmapbt=0, reflink=0

data = bsize=4096 blocks=122062848, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=59601, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

root@office:~# fdisk -l /dev/data/data-lv

Disk /dev/data/data-lv: 465.6 GiB, 499969425408 bytes, 976502784 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

# Mount the storage directory

root@office:~# mount /dev/data/data-lv /data/

root@office:~# cat /etc/fstab

# <file system> <mount point> <type> <options> <dump> <pass>

/dev/mapper/ubuntu--18--vg-root / xfs defaults 0 0

# /boot was on /dev/sda1 during installation

UUID=d9974eb6-44d5-4ba2-a1d6-e0ae846aea5c /boot ext4 defaults 0 2

/swapfile none swap sw 0 0

/dev/data/data-lv /data xfs defaults 0 0

---

root@office:~# df -TH

Filesystem Type Size Used Avail Use% Mounted on

udev devtmpfs 4.2G 0 4.2G 0% /dev

tmpfs tmpfs 827M 926k 826M 1% /run

/dev/mapper/ubuntu--18--vg-root xfs 239G 4.3G 235G 2% /

tmpfs tmpfs 4.2G 0 4.2G 0% /dev/shm

tmpfs tmpfs 5.3M 0 5.3M 0% /run/lock

tmpfs tmpfs 4.2G 0 4.2G 0% /sys/fs/cgroup

/dev/sdb1 ext4 991M 65M 859M 7% /boot

tmpfs tmpfs 827M 0 827M 0% /run/user/0

/dev/mapper/data-data--lv xfs 500G 532M 500G 1% /data

root@office:~# umount /data

root@office:~# mount -a

root@office:~# df -TH

Filesystem Type Size Used Avail Use% Mounted on

udev devtmpfs 4.2G 0 4.2G 0% /dev

tmpfs tmpfs 827M 926k 826M 1% /run

/dev/mapper/ubuntu--18--vg-root xfs 239G 4.3G 235G 2% /

tmpfs tmpfs 4.2G 0 4.2G 0% /dev/shm

tmpfs tmpfs 5.3M 0 5.3M 0% /run/lock

tmpfs tmpfs 4.2G 0 4.2G 0% /sys/fs/cgroup

/dev/sdb1 ext4 991M 65M 859M 7% /boot

tmpfs tmpfs 827M 0 827M 0% /run/user/0

/dev/mapper/data-data--lv xfs 500G 532M 500G 1% /data

3. Software RAID

datetime: 20240508 OS: CentOS7

3.1 Creating software RAID 1

# Create the partitions /dev/sda1 and /dev/sdb1

[root@testhoteles ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 465.8G 0 disk

└─sda1 8:1 0 465.8G 0 part

sdb 8:16 0 465.8G 0 disk

└─sdb1 8:17 0 465.8G 0 part

sdc 8:32 0 223.6G 0 disk

├─sdc1 8:33 0 500M 0 part /boot

└─sdc2 8:34 0 223.1G 0 part

├─centos-root 253:0 0 50G 0 lvm /

├─centos-swap 253:1 0 7.8G 0 lvm [SWAP]

└─centos-home 253:2 0 165.2G 0 lvm /home

# Create the software RAID 1 array

[root@testhoteles ~]# yum install mdadm -y

[root@testhoteles ~]# mdadm --create --verbose /dev/md1 --level=1 --raid-devices=2 /dev/sda1 /dev/sdb1

mdadm: partition table exists on /dev/sda1

mdadm: partition table exists on /dev/sda1 but will be lost or

meaningless after creating array

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

mdadm: partition table exists on /dev/sdb1

mdadm: partition table exists on /dev/sdb1 but will be lost or

meaningless after creating array

mdadm: size set to 488253440K

mdadm: automatically enabling write-intent bitmap on large array

Continue creating array? y # confirm

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md1 started.

# Show detailed information about the software RAID array

[root@testhoteles ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Wed May 8 06:11:58 2024

Raid Level : raid1

Array Size : 488253440 (465.63 GiB 499.97 GB)

Used Dev Size : 488253440 (465.63 GiB 499.97 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Intent Bitmap : Internal

Update Time : Wed May 8 06:12:43 2024

State : clean, resyncing

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : bitmap

Resync Status : 1% complete

Name : testhoteles.hs.com:1 (local to host testhoteles.hs.com)

UUID : 611ac412:b50551be:6cf89141:7d2d4580

Events : 9

Number Major Minor RaidDevice State

0 8 1 0 active sync /dev/sda1

1 8 17 1 active sync /dev/sdb1

# Show the status of the assembled (active) arrays

[root@testhoteles ~]# cat /proc/mdstat

Personalities : [raid1]

md1 : active raid1 sdb1[1] sda1[0]

488253440 blocks super 1.2 [2/2] [UU]

[>....................] resync = 1.8% (9164160/488253440) finish=66.3min speed=120344K/sec

bitmap: 4/4 pages [16KB], 65536KB chunk

unused devices: <none>

# Query the array configuration

[root@testhoteles ~]# mdadm -Q /dev/md1

/dev/md1: 465.63GiB raid1 2 devices, 0 spares. Use mdadm --detail for more detail.

[root@testhoteles ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 465.8G 0 disk

└─sda1 8:1 0 465.8G 0 part

└─md1 9:1 0 465.7G 0 raid1

sdb 8:16 0 465.8G 0 disk

└─sdb1 8:17 0 465.8G 0 part

└─md1 9:1 0 465.7G 0 raid1

sdc 8:32 0 223.6G 0 disk

├─sdc1 8:33 0 500M 0 part /boot

└─sdc2 8:34 0 223.1G 0 part

├─centos-root 253:0 0 50G 0 lvm /

├─centos-swap 253:1 0 7.8G 0 lvm [SWAP]

└─centos-home 253:2 0 165.2G 0 lvm /home

# Examine a member device's array configuration (dumps the superblock contents)

[root@testhoteles ~]# mdadm --query --examine /dev/sda1

/dev/sda1:

Magic : a92b4efc

Version : 1.2

Feature Map : 0x1

Array UUID : 611ac412:b50551be:6cf89141:7d2d4580

Name : testhoteles.hs.com:1 (local to host testhoteles.hs.com)

Creation Time : Wed May 8 06:11:58 2024

Raid Level : raid1

Raid Devices : 2

Avail Dev Size : 976506928 sectors (465.63 GiB 499.97 GB)

Array Size : 488253440 KiB (465.63 GiB 499.97 GB)

Used Dev Size : 976506880 sectors (465.63 GiB 499.97 GB)

Data Offset : 264192 sectors

Super Offset : 8 sectors

Unused Space : before=264112 sectors, after=48 sectors

State : active

Device UUID : 88720bbd:f63659bd:f432717e:8440d35c

Internal Bitmap : 8 sectors from superblock

Update Time : Wed May 8 06:33:01 2024

Bad Block Log : 512 entries available at offset 16 sectors

Checksum : 7c6f3018 - correct

Events : 225

Device Role : Active device 0

Array State : AA ('A' == active, '.' == missing, 'R' == replacing)

# State once the resync has finished

[root@testhoteles ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Wed May 8 06:11:58 2024

Raid Level : raid1

Array Size : 488253440 (465.63 GiB 499.97 GB)

Used Dev Size : 488253440 (465.63 GiB 499.97 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Intent Bitmap : Internal

Update Time : Wed May 8 15:35:00 2024

State : clean # no longer resyncing; the array is clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : bitmap

Name : testhoteles.hs.com:1 (local to host testhoteles.hs.com)

UUID : 611ac412:b50551be:6cf89141:7d2d4580

Events : 1127

Number Major Minor RaidDevice State

0 8 1 0 active sync /dev/sda1

1 8 17 1 active sync /dev/sdb1

3.2 Stopping and reassembling the array

[root@testhoteles ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 465.8G 0 disk

└─sda1 8:1 0 465.8G 0 part

└─md1 9:1 0 465.7G 0 raid1

sdb 8:16 0 465.8G 0 disk

└─sdb1 8:17 0 465.8G 0 part

└─md1 9:1 0 465.7G 0 raid1

sdc 8:32 0 223.6G 0 disk

├─sdc1 8:33 0 500M 0 part /boot

└─sdc2 8:34 0 223.1G 0 part

├─centos-root 253:0 0 50G 0 lvm /

├─centos-swap 253:1 0 7.8G 0 lvm [SWAP]

└─centos-home 253:2 0 165.2G 0 lvm /home

# Stop the software RAID array

[root@testhoteles ~]# mdadm --stop /dev/md1

mdadm: stopped /dev/md1

# List the block devices

[root@testhoteles ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 465.8G 0 disk

└─sda1 8:1 0 465.8G 0 part

sdb 8:16 0 465.8G 0 disk

└─sdb1 8:17 0 465.8G 0 part

sdc 8:32 0 223.6G 0 disk

├─sdc1 8:33 0 500M 0 part /boot

└─sdc2 8:34 0 223.1G 0 part

├─centos-root 253:0 0 50G 0 lvm /

├─centos-swap 253:1 0 7.8G 0 lvm [SWAP]

└─centos-home 253:2 0 165.2G 0 lvm /home

# Reassemble the array

# mdadm --assemble --scan # assemble all arrays automatically

[root@testhoteles ~]# mdadm --assemble /dev/md1 /dev/sda1 /dev/sdb1 --force

mdadm: /dev/md1 has been started with 2 drives.

[root@testhoteles ~]#

mdadm: /dev/md/1 has been started with 2 drives.

[root@testhoteles ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Wed May 8 06:11:58 2024

Raid Level : raid1

Array Size : 488253440 (465.63 GiB 499.97 GB)

Used Dev Size : 488253440 (465.63 GiB 499.97 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Intent Bitmap : Internal

Update Time : Wed May 8 06:28:39 2024

State : clean, resyncing

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : bitmap

Resync Status : 20% complete

Name : testhoteles.hs.com:1 (local to host testhoteles.hs.com)

UUID : 611ac412:b50551be:6cf89141:7d2d4580

Events : 172

Number Major Minor RaidDevice State

0 8 1 0 active sync /dev/sda1

1 8 17 1 active sync /dev/sdb1

3.3 Saving the existing array configuration

[root@testhoteles ~]# mdadm --detail --scan > /etc/mdadm.conf

[root@testhoteles ~]# cat /etc/mdadm.conf

ARRAY /dev/md1 metadata=1.2 name=testhoteles.hs.com:1 UUID=611ac412:b50551be:6cf89141:7d2d4580

3.4 Mounting the array and mounting it at boot

[root@testhoteles ~]# mkfs -t xfs /dev/md1

[root@testhoteles ~]# mkdir /data

[root@testhoteles ~]# cat /etc/fstab | grep /data

/dev/md1 /data xfs defaults 0 0

[root@testhoteles ~]# mount -a

[root@testhoteles ~]# df -TH | grep /data

/dev/md1 xfs 500G 34M 500G 1% /data

# On CentOS 7, rebuild the initramfs so the array is not renamed to md127. Rebuild it after every change to the RAID configuration so that the correct array information is found at boot.

[root@testhoteles ~]# dracut --mdadmconf --fstab --add="mdraid" --filesystems "xfs ext4 ext3" --add-drivers="raid1" --force /boot/initramfs-$(uname -r).img $(uname -r) -M

# Check that the configuration took effect

[root@syslog ~]# ls /boot/initramfs-$(uname -r).img

/boot/initramfs-3.10.0-1160.119.1.el7.x86_64.img

# List the files inside /boot/initramfs-3.10.0-1160.119.1.el7.x86_64.img, filtered by pattern

[root@syslog ~]# lsinitrd /boot/initramfs-$(uname -r).img | grep -E 'mdraid|raid1|xfs|ext4|ext3'

Arguments: --mdadmconf --fstab --add 'mdraid' --filesystems 'xfs ext4 ext3' --add-drivers 'raid1' --force -M

mdraid

-rwxr-xr-x 1 root root 265 Sep 12 2013 usr/lib/dracut/hooks/cleanup/99-mdraid-needshutdown.sh

-rwxr-xr-x 1 root root 910 Sep 12 2013 usr/lib/dracut/hooks/pre-mount/10-mdraid-waitclean.sh

-rw-r--r-- 1 root root 22232 Jun 4 2024 usr/lib/modules/3.10.0-1160.119.1.el7.x86_64/kernel/drivers/md/raid1.ko.xz

drwxr-xr-x 2 root root 0 Apr 9 13:57 usr/lib/modules/3.10.0-1160.119.1.el7.x86_64/kernel/fs/ext4

-rw-r--r-- 1 root root 224256 Jun 4 2024 usr/lib/modules/3.10.0-1160.119.1.el7.x86_64/kernel/fs/ext4/ext4.ko.xz

drwxr-xr-x 2 root root 0 Apr 9 13:57 usr/lib/modules/3.10.0-1160.119.1.el7.x86_64/kernel/fs/xfs

-rw-r--r-- 1 root root 348176 Jun 4 2024 usr/lib/modules/3.10.0-1160.119.1.el7.x86_64/kernel/fs/xfs/xfs.ko.xz

-rwxr-xr-x 3 root root 0 Apr 9 13:57 usr/sbin/fsck.ext3

-rwxr-xr-x 3 root root 256560 Apr 9 13:57 usr/sbin/fsck.ext4

-rwxr-xr-x 1 root root 433 Oct 1 2020 usr/sbin/fsck.xfs

-rwxr-xr-x 1 root root 708 Sep 12 2013 usr/sbin/mdraid-cleanup

-rwxr-xr-x 1 root root 1951 Sep 30 2020 usr/sbin/mdraid_start

-rwxr-xr-x 1 root root 590208 Apr 9 13:57 usr/sbin/xfs_db

-rwxr-xr-x 1 root root 747 Oct 1 2020 usr/sbin/xfs_metadump

-rwxr-xr-x 1 root root 576720 Apr 9 13:57 usr/sbin/xfs_repair

## Show the contents of etc/mdadm.conf inside /boot/initramfs-3.10.0-1160.119.1.el7.x86_64.img

[root@syslog ~]# lsinitrd /boot/initramfs-$(uname -r).img -f etc/mdadm.conf

ARRAY /dev/md1 metadata=1.2 name=syslog:1 UUID=2b810998:9c4d6da4:cfac8aa3:2c176956

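Rebuilding the initramfs on CentOS 7 turned out to be optional: in a later test the server was rebooted without it and the array was still mounted at /data.

3.5 Simulating a software RAID failure and repairing it

# Manually mark a device as failed (for example when SMART shows signs of wear)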

[root@testhoteles ~]# mdadm --manage /dev/md1 --fail /dev/sda1

mdadm: set /dev/sda1 faulty in /dev/md1

[root@testhoteles ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Wed May 8 06:11:58 2024

Raid Level : raid1

Array Size : 488253440 (465.63 GiB 499.97 GB)

Used Dev Size : 488253440 (465.63 GiB 499.97 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Intent Bitmap : Internal

Update Time : Wed May 8 15:39:18 2024

State : clean, degraded

Active Devices : 1

Working Devices : 1

Failed Devices : 1

Spare Devices : 0

Consistency Policy : bitmap

Name : testhoteles.hs.com:1 (local to host testhoteles.hs.com)

UUID : 611ac412:b50551be:6cf89141:7d2d4580

Events : 1129

Number Major Minor RaidDevice State

- 0 0 0 removed

1 8 17 1 active sync /dev/sdb1

0 8 1 - faulty /dev/sda1 # /dev/sda1 is now faulty; the array remains usable

[root@testhoteles ~]# df -TH | grep /data

/dev/md1 xfs 500G 35M 500G 1% /data

# Remove the faulty member device (a failing device should be replaced early)

[root@testhoteles ~]# mdadm --manage /dev/md1 --remove /dev/sda1

mdadm: hot removed /dev/sda1 from /dev/md1

[root@testhoteles ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Wed May 8 06:11:58 2024

Raid Level : raid1

Array Size : 488253440 (465.63 GiB 499.97 GB)

Used Dev Size : 488253440 (465.63 GiB 499.97 GB)

Raid Devices : 2

Total Devices : 1

Persistence : Superblock is persistent

Intent Bitmap : Internal

Update Time : Wed May 8 15:41:10 2024

State : clean, degraded

Active Devices : 1

Working Devices : 1

Failed Devices : 0

Spare Devices : 0

Consistency Policy : bitmap

Name : testhoteles.hs.com:1 (local to host testhoteles.hs.com)

UUID : 611ac412:b50551be:6cf89141:7d2d4580

Events : 1134

Number Major Minor RaidDevice State

- 0 0 0 removed # the device has been removed

1 8 17 1 active sync /dev/sdb1

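# Add a member back to the existing array, which starts the repair. Because the superblock on the disk was not wiped and the disk was previously a member of /dev/md1, it is re-added and needs little or no resync before it is usable (the write-intent bitmap records which regions changed).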

[root@testhoteles ~]# mdadm /dev/md1 --add /dev/sda1

mdadm: re-added /dev/sda1

[root@testhoteles ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Wed May 8 06:11:58 2024

Raid Level : raid1

Array Size : 488253440 (465.63 GiB 499.97 GB)

Used Dev Size : 488253440 (465.63 GiB 499.97 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Intent Bitmap : Internal

Update Time : Wed May 8 15:42:06 2024

State : clean # the array is healthy

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : bitmap

Name : testhoteles.hs.com:1 (local to host testhoteles.hs.com)

UUID : 611ac412:b50551be:6cf89141:7d2d4580

Events : 1158

Number Major Minor RaidDevice State

0 8 1 0 active sync /dev/sda1

1 8 17 1 active sync /dev/sdb1

# While data is being written, the state is active

[root@testhoteles ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Wed May 8 06:11:58 2024

Raid Level : raid1

Array Size : 488253440 (465.63 GiB 499.97 GB)

Used Dev Size : 488253440 (465.63 GiB 499.97 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Intent Bitmap : Internal

Update Time : Wed May 8 12:25:06 2024

State : active

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : bitmap

Name : testhoteles.hs.com:1 (local to host testhoteles.hs.com)

UUID : 611ac412:b50551be:6cf89141:7d2d4580

Events : 2291

Number Major Minor RaidDevice State

2 8 1 0 active sync /dev/sda1

1 8 17 1 active sync /dev/sdb1
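Throughout this section the State line of `mdadm -D` is what distinguishes a healthy array (clean, active) from a degraded one (clean, degraded). The check can be scripted as sketched below; `md_state` and `md_is_degraded` are hypothetical helper names, and both read `mdadm --detail` output on stdin so they can be tried on saved output.

```shell
#!/bin/sh
# md_state   (hypothetical helper)
# Prints the value of the "State :" line from `mdadm --detail` output on stdin.
# The per-device table header ("... RaidDevice State") has no colon after
# State, so it does not match.
md_state() {
    sed -n 's/^[[:space:]]*State[[:space:]]*:[[:space:]]*//p' |
        sed 's/[[:space:]]*$//' |
        head -n 1
}

# md_is_degraded   (hypothetical helper)
# Succeeds if the comma-separated state list contains "degraded".
md_is_degraded() {
    case ", $(md_state)," in
        *", degraded,"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Example (as root):
#   mdadm --detail /dev/md1 | md_is_degraded && echo "md1 is degraded"
```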

3.6 Growing a software RAID array (possible for RAID 0 and RAID 5)

mdadm /dev/md5 -a /dev/sde1

mdadm --grow /dev/md5 --raid-devices=4

xfs_growfs -d /mnt
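How much space a grow operation yields depends on the RAID level. The sketch below computes the usable capacity for the levels mentioned in these notes. It ignores metadata overhead and assumes all members have the same size; `raid_usable` is a hypothetical helper name.

```shell
#!/bin/sh
# raid_usable LEVEL DEVICES SIZE   (hypothetical helper)
# Prints the approximate usable capacity, in the same unit as SIZE,
# of an array with DEVICES equally sized members.
raid_usable() {
    level=$1
    n=$2
    size=$3
    case $level in
        0) echo $(( n * size )) ;;          # striping: all members hold data
        1) echo "$size" ;;                  # mirroring: one member's worth
        5) echo $(( (n - 1) * size )) ;;    # one member's worth of parity
        6) echo $(( (n - 2) * size )) ;;    # two members' worth of parity
        *) echo "unsupported level: $level" >&2; return 1 ;;
    esac
}

raid_usable 5 3 465   # three 465 GiB members -> 930
raid_usable 5 4 465   # after growing to four  -> 1395
```

Adding a member to a RAID 1 array, by contrast, adds another mirror rather than more space.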

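3.7 Wiping the software RAID superblock

When a partition is taken out of a software RAID array, its RAID superblock must be wiped before the partition is reused for anything else. Only then is the partition clean enough to be mounted on its own or used as a member of another array.

# Wipe the superblock on the disk (the device must first be failed and removed from the array)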

[root@testhoteles ~]# mdadm --zero-superblock /dev/sda1

[root@testhoteles ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Wed May 8 06:11:58 2024

Raid Level : raid1

Array Size : 488253440 (465.63 GiB 499.97 GB)

Used Dev Size : 488253440 (465.63 GiB 499.97 GB)

Raid Devices : 2

Total Devices : 1

Persistence : Superblock is persistent

Intent Bitmap : Internal

Update Time : Wed May 8 15:43:16 2024

State : clean, degraded

Active Devices : 1

Working Devices : 1

Failed Devices : 0

Spare Devices : 0

Consistency Policy : bitmap

Name : testhoteles.hs.com:1 (local to host testhoteles.hs.com)

UUID : 611ac412:b50551be:6cf89141:7d2d4580

Events : 1163

Number Major Minor RaidDevice State

- 0 0 0 removed

1 8 17 1 active sync /dev/sdb1

# Adding the disk back after its superblock has been wiped makes it resync from the other disk from scratch, starting at 0%

[root@testhoteles ~]# mdadm /dev/md1 --add /dev/sda1

mdadm: added /dev/sda1

[root@testhoteles ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Wed May 8 06:11:58 2024

Raid Level : raid1

Array Size : 488253440 (465.63 GiB 499.97 GB)

Used Dev Size : 488253440 (465.63 GiB 499.97 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Intent Bitmap : Internal

Update Time : Wed May 8 15:43:47 2024

State : clean, degraded, recovering

Active Devices : 1

Working Devices : 2

Failed Devices : 0

Spare Devices : 1

Consistency Policy : bitmap

Rebuild Status : 0% complete

Name : testhoteles.hs.com:1 (local to host testhoteles.hs.com)

UUID : 611ac412:b50551be:6cf89141:7d2d4580

Events : 1167

Number Major Minor RaidDevice State

2 8 1 0 spare rebuilding /dev/sda1

1 8 17 1 active sync /dev/sdb1

3.8 Software RAID failure alerts

$ yum install -y mailx

$ vim /etc/mail.rc

set from=name@test.com

set smtp=smtp.qiye.163.com

set smtp-auth=login

set smtp-auth-user=name@test.com

set smtp-auth-password=password

set ssl-verify=ignore

$ cat /shell/send_mail.sh

#!/bin/bash

HOST=`/sbin/ip a s eth0 | /bin/grep -oP '(?<=inet\s)\d+(\.\d+){3}'`

echo "testhoteles($HOST) software RAID status changed, a device may have failed!" | mail -s "softraid alert" name@test.com

$ vim /etc/rc.d/rc.local

# monitor mdadm

mdadm --monitor --daemonise --delay=60 --scan --program=/shell/send_mail.sh
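According to the mdadm manual, the program given with `--program` is called with the event name, the md device and, for some events, the affected component device as arguments. The script above ignores them, so every alert reads the same. A sketch of a message builder that uses those arguments follows; `format_alert` is a hypothetical function name, and the mail command is the one already used above.

```shell
#!/bin/sh
# format_alert EVENT ARRAY [COMPONENT]   (hypothetical helper)
# Builds a one-line alert text from the arguments that
# `mdadm --monitor --program=...` passes to the program.
format_alert() {
    event=$1
    array=$2
    component=${3:-}
    msg="mdadm event $event on $array"
    if [ -n "$component" ]; then
        msg="$msg (device: $component)"
    fi
    printf '%s\n' "$msg"
}

# Inside /shell/send_mail.sh this could be used as:
#   format_alert "$@" | mail -s "softraid alert" name@test.com
```

With this, a failure of /dev/sda1 in /dev/md1 would produce a subject-independent body such as "mdadm event Fail on /dev/md1 (device: /dev/sda1)".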

3.9 软RAID相关故障

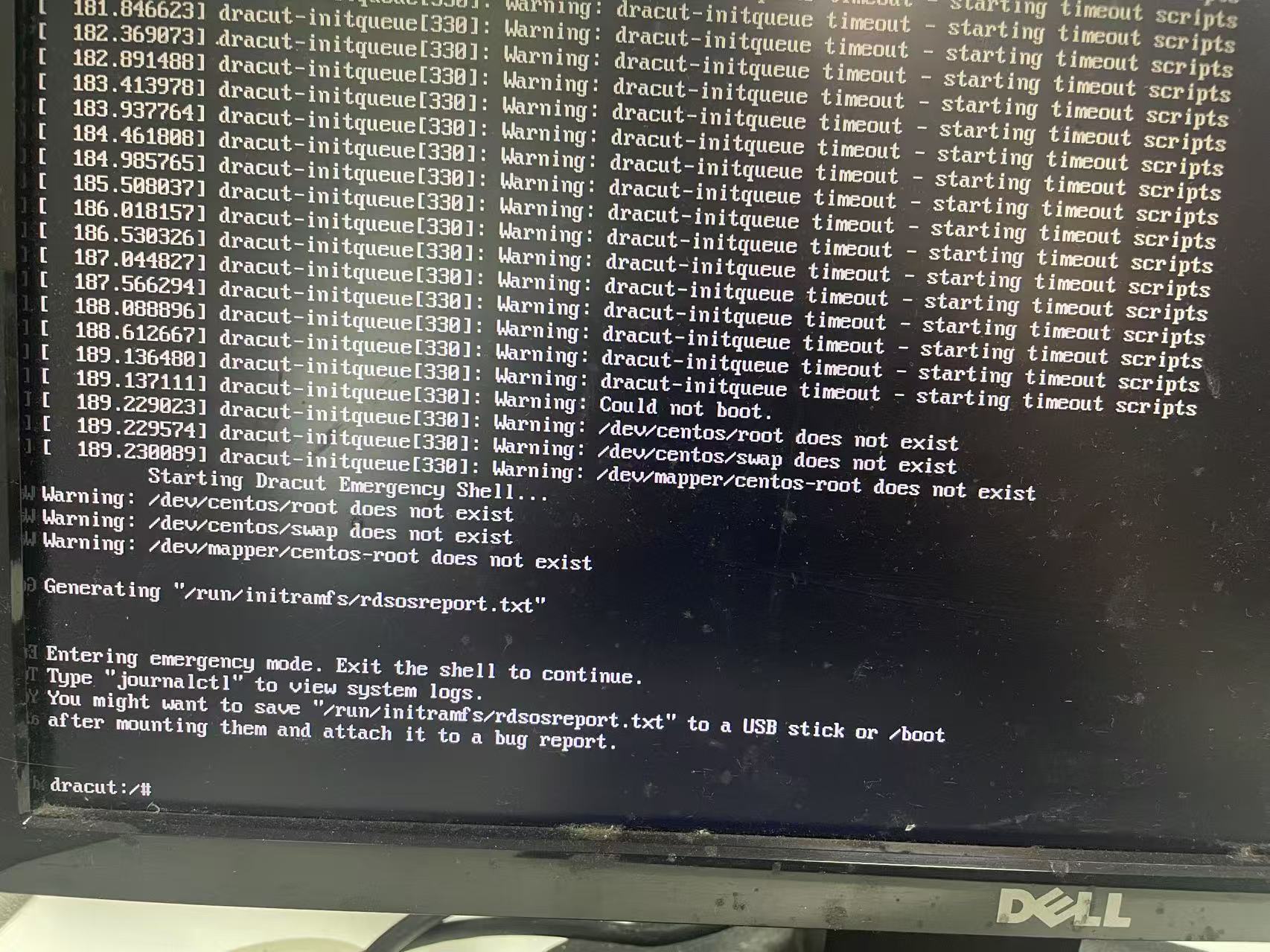

**事情起由:**公司停电,服务器未先关闭,所以服务器是突然关闭的,来电后,服务器起来不了

centos7 启动报错描述:

dracut-initqueue: Warning: dracut-initqueue timeout - starting timeout scripts;

dracut-initqueue: Warning: dracut-initqueue timeout - starting timeout scripts;

.....

dracut-initqueue: Warning: /dev/centos/root does not exist

dracut-initqueue: Warning: /dev/centos/swap does not exist

dracut-initqueue: Warning: /dev/mapper/centos-root does not exist

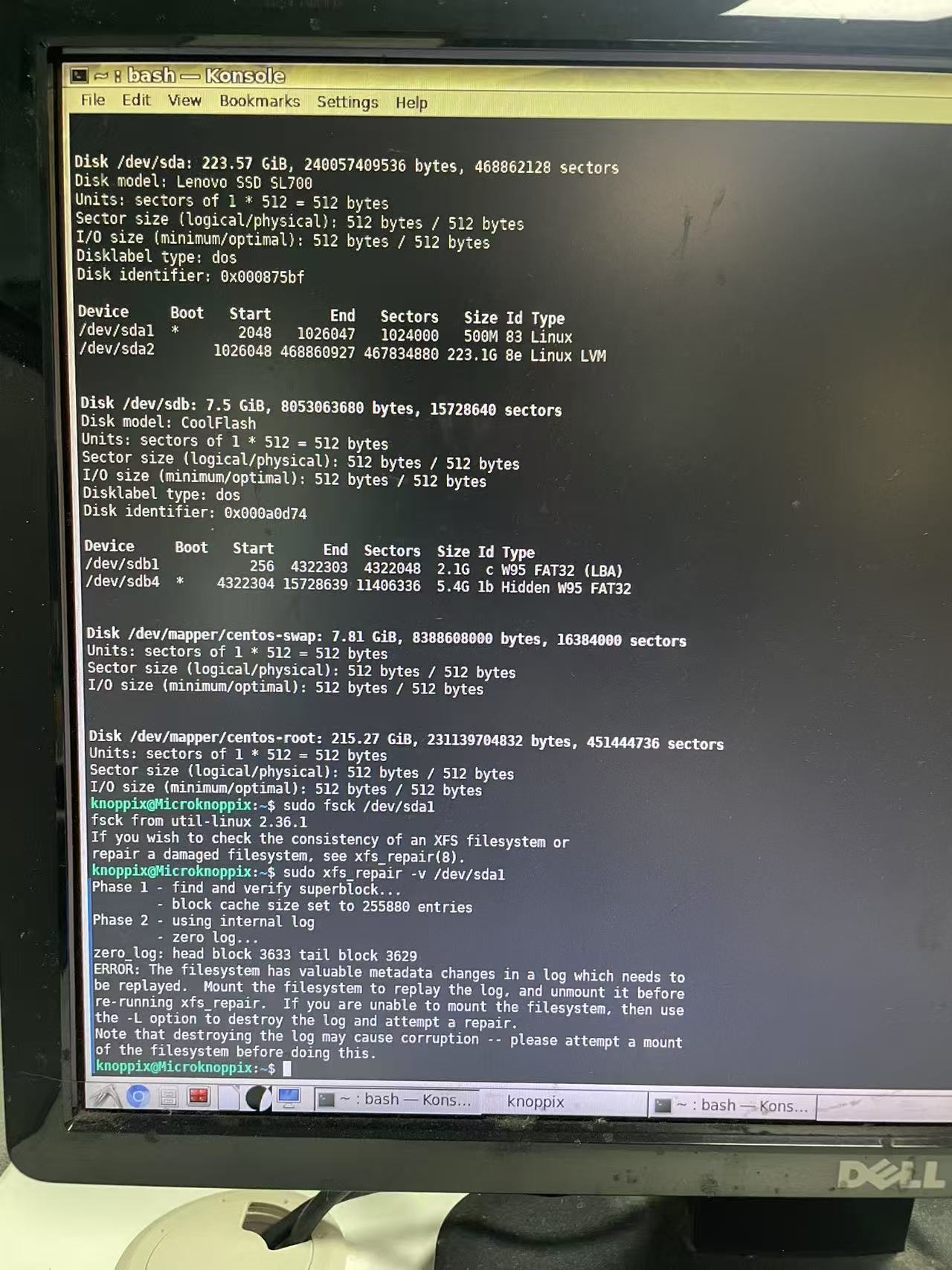

1. Mount the system disk from a LiveCD (this approach was not used in the end)

# Mount /

sudo mount /dev/mapper/centos-root /mnt

# Mount /boot

sudo mount /dev/sda1 /mnt/boot

# Bind the LiveCD's /dev and /proc into /mnt

sudo mount -o bind /dev /mnt/dev

sudo mount -t proc /proc /mnt/proc

# Change root to /mnt

sudo chroot /mnt

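# Inside the chroot it is only possible to edit and back up configuration files. Operations that repair /boot fail: the command below, for example, reports that some files under /proc cannot be found and does not succeed. This is exactly where the fault lies. When the RAID was set up, the parameters inside initramfs-3.10.0-1160.119.1.el7.x86_64.img were changed so that the RAID device name stays fixed and the array is mounted automatically at boot, and after the sudden power loss those parameters no longer matched the software RAID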

dracut -f /boot/initramfs-3.10.0-1160.119.1.el7.x86_64.img 3.10.0-1160.119.1.el7.x86_64

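# The repair did not work, so leave the chroot

exit

# From the LiveCD system, repair the file systems on the original system's partitions

# Repair `/`: the command succeeded

sudo xfs_repair -v /dev/mapper/centos-root

# Repair `/boot`: the command failed

sudo fsck /dev/sda1 # Suggests using xfs_repair, so this is probably not an ext file system

sudo xfs_repair -v /dev/sda1 # Reports that the file system has metadata changes and that `sudo xfs_repair -vL /dev/sda1` is required. The `-L` option can corrupt the file system, so it was not run; treat it as a last resort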

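Conclusions from the LiveCD attempt:

- In the chroot, operations on the /boot partition that involve the kernel, such as dracut, cannot be performed.

- In the chroot, data on the old disk can be backed up to another location.

- From the LiveCD command line, repairing the /boot partition fails, which indicates that this partition is damaged. The repair requires the -L option, but -L can corrupt the file system, so it was not run; treat it as a last resort. The partition that reports errors is usually the one with the problem.

- From the LiveCD command line, repairing the / partition succeeds, so that partition is fine.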
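The chroot above only had /dev and /proc mounted. dracut also reads /sys and /run, so one possible reason for the failure (an assumption, not verified on this machine) is that those two were missing. The sketch below prints the bind mounts usually set up before a chroot; `prepare_chroot` is a hypothetical helper, and by default it only echoes the commands instead of running them.

```shell
#!/bin/bash
# Print (default) or run the bind mounts a chroot usually needs.
# $1: root of the mounted system, e.g. /mnt
# $2: command prefix; "echo" (default) is a dry run, "sudo" actually mounts.
prepare_chroot() {
    local root="$1" run="${2:-echo}" fs
    for fs in dev proc sys run; do
        $run mount --bind "/$fs" "$root/$fs"
    done
}

# Dry run: shows the four mount commands for a system mounted on /mnt.
prepare_chroot /mnt
```

To perform the mounts for real, call `prepare_chroot /mnt sudo`, then `sudo chroot /mnt` and retry the dracut command.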

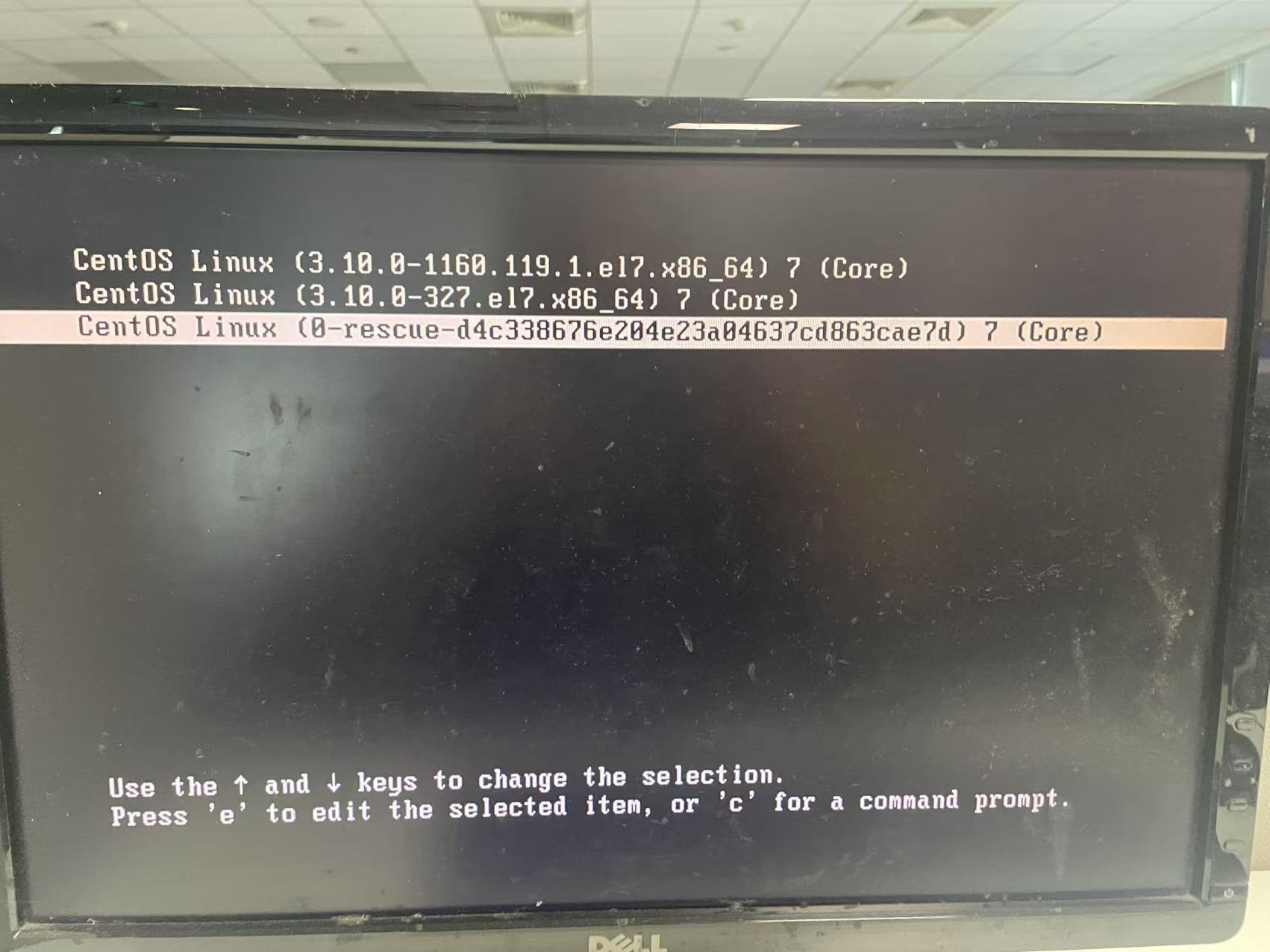

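2. Repair from the system's built-in rescue mode (this is the approach that was used)

CentOS boot menu entries:

- CentOS Linux (3.10.0-1160.119.1.el7.x86_64) 7 (Core) is the default entry. Its kernel, 1160, is the version installed by the system update.

- CentOS Linux (3.10.0-327.el7.x86_64) 7 (Core) is the old entry. Its kernel, 327, is the version from before the update.

- CentOS Linux (0-rescue-d4c338676e204e23a84637cd863cae7d) 7 (Core) is the rescue entry. Its kernel is also 327, but it differs slightly from the CentOS Linux (3.10.0-327.el7.x86_64) 7 (Core) entry. This is the mode used to repair the /boot partition.

Boot into rescue mode and run the following commands to repair the /boot partition

# Choose the img that belongs to the default boot menu entry, as shown in /boot/grub2/grub.cfg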

[root@syslog ~]# dracut -f /boot/initramfs-3.10.0-1160.119.1.el7.x86_64.img 3.10.0-1160.119.1.el7.x86_64

[root@syslog ~]# lsinitrd /boot/initramfs-3.10.0-1160.119.1.el7.x86_64.img | grep -E 'mdraid|raid1|xfs|ext4|ext3'

drwxr-xr-x 2 root root 0 Sep 17 2013 usr/lib/modules/3.10.0-1160.119.1.el7.x86_64/kernel/fs/xfs

-rw-r--r-- 1 root root 348176 Jun 4 2024 usr/lib/modules/3.10.0-1160.119.1.el7.x86_64/kernel/fs/xfs/xfs.ko.xz

-rwxr-xr-x 1 root root 433 Oct 1 2020 usr/sbin/fsck.xfs

-rwxr-xr-x 1 root root 590208 Sep 17 2013 usr/sbin/xfs_db

-rwxr-xr-x 1 root root 747 Oct 1 2020 usr/sbin/xfs_metadump

-rwxr-xr-x 1 root root 576720 Sep 17 2013 usr/sbin/xfs_repair

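After the repair, reboot and start from the CentOS Linux (3.10.0-1160.119.1.el7.x86_64) 7 (Core) entry

# The system now boots normally; inspecting the img again shows that the md1-related configuration written earlier is gone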

[root@syslog ~]# lsinitrd /boot/initramfs-$(uname -r).img | grep -E 'mdraid|raid1|xfs|ext4|ext3'

drwxr-xr-x 2 root root 0 Sep 17 2013 usr/lib/modules/3.10.0-1160.119.1.el7.x86_64/kernel/fs/xfs

-rw-r--r-- 1 root root 348176 Jun 4 2024 usr/lib/modules/3.10.0-1160.119.1.el7.x86_64/kernel/fs/xfs/xfs.ko.xz

-rwxr-xr-x 1 root root 433 Oct 1 2020 usr/sbin/fsck.xfs

-rwxr-xr-x 1 root root 590208 Sep 17 2013 usr/sbin/xfs_db

-rwxr-xr-x 1 root root 747 Oct 1 2020 usr/sbin/xfs_metadump

-rwxr-xr-x 1 root root 576720 Sep 17 2013 usr/sbin/xfs_repair

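The mdraid-related parameters configured earlier are now gone. For comparison, this is the output from when they were still configured: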

[root@syslog ~]# lsinitrd /boot/initramfs-$(uname -r).img | grep -E 'mdraid|raid1|xfs|ext4|ext3'

Arguments: --mdadmconf --fstab --add 'mdraid' --filesystems 'xfs ext4 ext3' --add-drivers 'raid1' --force -M

mdraid

-rwxr-xr-x 1 root root 265 Sep 12 2013 usr/lib/dracut/hooks/cleanup/99-mdraid-needshutdown.sh

-rwxr-xr-x 1 root root 910 Sep 12 2013 usr/lib/dracut/hooks/pre-mount/10-mdraid-waitclean.sh

-rw-r--r-- 1 root root 22232 Jun 4 2024 usr/lib/modules/3.10.0-1160.119.1.el7.x86_64/kernel/drivers/md/raid1.ko.xz

drwxr-xr-x 2 root root 0 Apr 9 13:57 usr/lib/modules/3.10.0-1160.119.1.el7.x86_64/kernel/fs/ext4

-rw-r--r-- 1 root root 224256 Jun 4 2024 usr/lib/modules/3.10.0-1160.119.1.el7.x86_64/kernel/fs/ext4/ext4.ko.xz

drwxr-xr-x 2 root root 0 Apr 9 13:57 usr/lib/modules/3.10.0-1160.119.1.el7.x86_64/kernel/fs/xfs

-rw-r--r-- 1 root root 348176 Jun 4 2024 usr/lib/modules/3.10.0-1160.119.1.el7.x86_64/kernel/fs/xfs/xfs.ko.xz

-rwxr-xr-x 3 root root 0 Apr 9 13:57 usr/sbin/fsck.ext3

-rwxr-xr-x 3 root root 256560 Apr 9 13:57 usr/sbin/fsck.ext4

-rwxr-xr-x 1 root root 433 Oct 1 2020 usr/sbin/fsck.xfs

-rwxr-xr-x 1 root root 708 Sep 12 2013 usr/sbin/mdraid-cleanup

-rwxr-xr-x 1 root root 1951 Sep 30 2020 usr/sbin/mdraid_start

-rwxr-xr-x 1 root root 590208 Apr 9 13:57 usr/sbin/xfs_db

-rwxr-xr-x 1 root root 747 Oct 1 2020 usr/sbin/xfs_metadump

-rwxr-xr-x 1 root root 576720 Apr 9 13:57 usr/sbin/xfs_repair

Check the software RAID mount configuration

[root@syslog ~]# cat /etc/fstab | grep md1

/dev/md1 /data xfs defaults 0 0

[root@syslog ~]# cat /etc/mdadm.conf

ARRAY /dev/md1 metadata=1.2 name=syslog:1 UUID=2b810998:9c4d6da4:cfac8aa3:2c176956

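After repeated reboot tests, /dev/md1 is mounted automatically on /data every time and the mount never fails. The CentOS 7 initramfs update command described earlier is therefore unnecessary; it is enough to make sure that /etc/fstab and /etc/mdadm.conf are configured correctly.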
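One way to confirm that /etc/mdadm.conf is correct is to compare the array UUIDs it lists with those of the arrays that are actually running. The sketch below assumes the ARRAY line format shown above; `array_uuid` and `check_mdadm_conf` are hypothetical helper names, and `check_mdadm_conf` needs root because it calls `mdadm --detail --scan`.

```shell
#!/bin/bash
# Extract the UUID=... value from ARRAY lines read on stdin.
array_uuid() {
    sed -n 's/.*UUID=\([0-9a-fA-F:]*\).*/\1/p'
}

# Succeed when the running arrays and the config file list the same UUIDs.
# $1: path to the mdadm config file (defaults to /etc/mdadm.conf).
check_mdadm_conf() {
    local conf="${1:-/etc/mdadm.conf}" running configured
    running=$(mdadm --detail --scan | array_uuid | sort)
    configured=$(grep '^ARRAY' "$conf" | array_uuid | sort)
    [ "$running" = "$configured" ]
}
```

After sourcing the file, `check_mdadm_conf && echo "mdadm.conf matches the running arrays"` gives a quick check following any change to the array.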

3. Handling a software RAID failure

Determine whether a disk has failed

# Check the software RAID status

[root@testhoteles ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Wed May 8 06:11:58 2024

Raid Level : raid1

Array Size : 488253440 (465.63 GiB 499.97 GB)

Used Dev Size : 488253440 (465.63 GiB 499.97 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Intent Bitmap : Internal

Update Time : Tue Jun 17 13:30:50 2025

State : active, degraded

Active Devices : 1

Working Devices : 1

Failed Devices : 1

Spare Devices : 0

Consistency Policy : bitmap

Name : testhoteles.hs.com:1 (local to host testhoteles.hs.com)

UUID : 611ac412:b50551be:6cf89141:7d2d4580

Events : 2033547

Number Major Minor RaidDevice State

- 0 0 0 removed

1 8 17 1 active sync /dev/sdb1

2 8 1 - faulty /dev/sda1

# Inspect the suspect disk; the output suggests it has probably failed

[root@testhoteles ~]# smartctl -a /dev/sda

smartctl 7.0 2018-12-30 r4883 [x86_64-linux-3.10.0-1160.118.1.el7.x86_64] (local build)

Copyright (C) 2002-18, Bruce Allen, Christian Franke, www.smartmontools.org

Short INQUIRY response, skip product id

A mandatory SMART command failed: exiting. To continue, add one or more '-T permissive' options.

# Inspect the healthy disk

[root@testhoteles ~]# smartctl -a /dev/sdb

smartctl 7.0 2018-12-30 r4883 [x86_64-linux-3.10.0-1160.118.1.el7.x86_64] (local build)

Copyright (C) 2002-18, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===

Model Family: Seagate Barracuda 7200.14 (AF)

Device Model: ST500DM002-1BD142

Serial Number: Z3TQ7THR

LU WWN Device Id: 5 000c50 064a40353

Firmware Version: KC47

User Capacity: 500,107,862,016 bytes [500 GB]

Sector Sizes: 512 bytes logical, 4096 bytes physical

Rotation Rate: 7200 rpm

Device is: In smartctl database [for details use: -P show]

ATA Version is: ATA8-ACS T13/1699-D revision 4

SATA Version is: SATA 3.0, 6.0 Gb/s (current: 6.0 Gb/s)

Local Time is: Tue Jun 17 13:31:17 2025 CST

SMART support is: Available - device has SMART capability.

SMART support is: Enabled

=== START OF READ SMART DATA SECTION ===

SMART overall-health self-assessment test result: PASSED

General SMART Values:

Offline data collection status: (0x82) Offline data collection activity

was completed without error.

Auto Offline Data Collection: Enabled.

Self-test execution status: ( 0) The previous self-test routine completed

without error or no self-test has ever

been run.

Total time to complete Offline

data collection: ( 600) seconds.

Offline data collection

capabilities: (0x7b) SMART execute Offline immediate.

Auto Offline data collection on/off support.

Suspend Offline collection upon new

command.

Offline surface scan supported.

Self-test supported.

Conveyance Self-test supported.

Selective Self-test supported.

SMART capabilities: (0x0003) Saves SMART data before entering

power-saving mode.

Supports SMART auto save timer.

Error logging capability: (0x01) Error logging supported.

General Purpose Logging supported.

Short self-test routine

recommended polling time: ( 1) minutes.

Extended self-test routine

recommended polling time: ( 78) minutes.

Conveyance self-test routine

recommended polling time: ( 2) minutes.

SCT capabilities: (0x303f) SCT Status supported.

SCT Error Recovery Control supported.

SCT Feature Control supported.

SCT Data Table supported.

SMART Attributes Data Structure revision number: 10

Vendor Specific SMART Attributes with Thresholds:

ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE

1 Raw_Read_Error_Rate 0x000f 102 099 006 Pre-fail Always - 23328

3 Spin_Up_Time 0x0003 100 097 000 Pre-fail Always - 0

4 Start_Stop_Count 0x0032 093 093 020 Old_age Always - 8147

5 Reallocated_Sector_Ct 0x0033 100 100 036 Pre-fail Always - 0

7 Seek_Error_Rate 0x000f 081 060 030 Pre-fail Always - 147092174

9 Power_On_Hours 0x0032 058 058 000 Old_age Always - 37450

10 Spin_Retry_Count 0x0013 100 100 097 Pre-fail Always - 0

12 Power_Cycle_Count 0x0032 099 099 020 Old_age Always - 1693

183 Runtime_Bad_Block 0x0032 100 100 000 Old_age Always - 0

184 End-to-End_Error 0x0032 100 100 099 Old_age Always - 0

187 Reported_Uncorrect 0x0032 099 099 000 Old_age Always - 1

188 Command_Timeout 0x0032 100 098 000 Old_age Always - 5 5 5

189 High_Fly_Writes 0x003a 100 100 000 Old_age Always - 0

190 Airflow_Temperature_Cel 0x0022 055 048 045 Old_age Always - 45 (Min/Max 21/49)

194 Temperature_Celsius 0x0022 045 052 000 Old_age Always - 45 (0 4 0 0 0)

195 Hardware_ECC_Recovered 0x001a 048 023 000 Old_age Always - 23328

197 Current_Pending_Sector 0x0012 100 100 000 Old_age Always - 0

198 Offline_Uncorrectable 0x0010 100 100 000 Old_age Offline - 0

199 UDMA_CRC_Error_Count 0x003e 200 200 000 Old_age Always - 0

240 Head_Flying_Hours 0x0000 100 253 000 Old_age Offline - 35085h+52m+51.078s

241 Total_LBAs_Written 0x0000 100 253 000 Old_age Offline - 686882885

242 Total_LBAs_Read 0x0000 100 253 000 Old_age Offline - 1844199465

SMART Error Log Version: 1

No Errors Logged

SMART Self-test log structure revision number 1

Num Test_Description Status Remaining LifeTime(hours) LBA_of_first_error

# 1 Short offline Completed without error 00% 0 -

SMART Selective self-test log data structure revision number 1

SPAN MIN_LBA MAX_LBA CURRENT_TEST_STATUS

1 0 0 Not_testing

2 0 0 Not_testing

3 0 0 Not_testing

4 0 0 Not_testing

5 0 0 Not_testing

Selective self-test flags (0x0):

After scanning selected spans, do NOT read-scan remainder of disk.

If Selective self-test is pending on power-up, resume after 0 minute delay.

# Check the kernel log for faults on the sda disk

[root@testhoteles ~]# dmesg | grep -i sda | tail -n 20

[30422350.262969] sd 0:0:0:0: [sda] tag#1 FAILED Result: hostbyte=DID_BAD_TARGET driverbyte=DRIVER_OK cmd_age=0s

[30422350.262977] sd 0:0:0:0: [sda] tag#1 CDB: ATA command pass through(16) 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00

[30422350.263274] sd 0:0:0:0: [sda] tag#2 FAILED Result: hostbyte=DID_BAD_TARGET driverbyte=DRIVER_OK cmd_age=0s

[30422350.263281] sd 0:0:0:0: [sda] tag#2 CDB: ATA command pass through(16) 85 06 2c 00 da 00 00 00 00 00 4f 00 c2 00 b0 00

[30424149.433114] sd 0:0:0:0: [sda] tag#5 FAILED Result: hostbyte=DID_BAD_TARGET driverbyte=DRIVER_OK cmd_age=0s

[30424149.433121] sd 0:0:0:0: [sda] tag#5 CDB: ATA command pass through(16) 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00

[30425949.742854] sd 0:0:0:0: [sda] tag#7 FAILED Result: hostbyte=DID_BAD_TARGET driverbyte=DRIVER_OK cmd_age=0s

[30425949.742862] sd 0:0:0:0: [sda] tag#7 CDB: ATA command pass through(16) 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00

[30427750.047628] sd 0:0:0:0: [sda] tag#9 FAILED Result: hostbyte=DID_BAD_TARGET driverbyte=DRIVER_OK cmd_age=0s

[30427750.047637] sd 0:0:0:0: [sda] tag#9 CDB: ATA command pass through(16) 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00

[30429550.214047] sd 0:0:0:0: [sda] tag#11 FAILED Result: hostbyte=DID_BAD_TARGET driverbyte=DRIVER_OK cmd_age=0s

[30429550.214055] sd 0:0:0:0: [sda] tag#11 CDB: ATA command pass through(16) 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00

[30431350.386420] sd 0:0:0:0: [sda] tag#13 FAILED Result: hostbyte=DID_BAD_TARGET driverbyte=DRIVER_OK cmd_age=0s

[30431350.386429] sd 0:0:0:0: [sda] tag#13 CDB: ATA command pass through(16) 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00

[30433149.616538] sd 0:0:0:0: [sda] tag#15 FAILED Result: hostbyte=DID_BAD_TARGET driverbyte=DRIVER_OK cmd_age=0s

[30433149.616543] sd 0:0:0:0: [sda] tag#15 CDB: ATA command pass through(16) 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00

[30434949.845775] sd 0:0:0:0: [sda] tag#17 FAILED Result: hostbyte=DID_BAD_TARGET driverbyte=DRIVER_OK cmd_age=0s

[30434949.845783] sd 0:0:0:0: [sda] tag#17 CDB: ATA command pass through(16) 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00

[30436750.032715] sd 0:0:0:0: [sda] tag#3 FAILED Result: hostbyte=DID_BAD_TARGET driverbyte=DRIVER_OK cmd_age=0s

[30436750.032722] sd 0:0:0:0: [sda] tag#3 CDB: ATA command pass through(16) 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00

[root@testhoteles ~]# journalctl -k | grep -i sda | tail -n 20

6月 17 09:27:54 testhoteles.hs.com kernel: sd 0:0:0:0: [sda] tag#1 FAILED Result: hostbyte=DID_BAD_TARGET driverbyte=DRIVER_OK cmd_age=0s

6月 17 09:27:54 testhoteles.hs.com kernel: sd 0:0:0:0: [sda] tag#1 CDB: ATA command pass through(16) 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00

6月 17 09:27:54 testhoteles.hs.com kernel: sd 0:0:0:0: [sda] tag#2 FAILED Result: hostbyte=DID_BAD_TARGET driverbyte=DRIVER_OK cmd_age=0s

6月 17 09:27:54 testhoteles.hs.com kernel: sd 0:0:0:0: [sda] tag#2 CDB: ATA command pass through(16) 85 06 2c 00 da 00 00 00 00 00 4f 00 c2 00 b0 00

6月 17 09:57:54 testhoteles.hs.com kernel: sd 0:0:0:0: [sda] tag#5 FAILED Result: hostbyte=DID_BAD_TARGET driverbyte=DRIVER_OK cmd_age=0s

6月 17 09:57:54 testhoteles.hs.com kernel: sd 0:0:0:0: [sda] tag#5 CDB: ATA command pass through(16) 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00

6月 17 10:27:54 testhoteles.hs.com kernel: sd 0:0:0:0: [sda] tag#7 FAILED Result: hostbyte=DID_BAD_TARGET driverbyte=DRIVER_OK cmd_age=0s

6月 17 10:27:54 testhoteles.hs.com kernel: sd 0:0:0:0: [sda] tag#7 CDB: ATA command pass through(16) 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00

6月 17 10:57:54 testhoteles.hs.com kernel: sd 0:0:0:0: [sda] tag#9 FAILED Result: hostbyte=DID_BAD_TARGET driverbyte=DRIVER_OK cmd_age=0s

6月 17 10:57:54 testhoteles.hs.com kernel: sd 0:0:0:0: [sda] tag#9 CDB: ATA command pass through(16) 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00

6月 17 11:27:54 testhoteles.hs.com kernel: sd 0:0:0:0: [sda] tag#11 FAILED Result: hostbyte=DID_BAD_TARGET driverbyte=DRIVER_OK cmd_age=0s

6月 17 11:27:54 testhoteles.hs.com kernel: sd 0:0:0:0: [sda] tag#11 CDB: ATA command pass through(16) 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00

6月 17 11:57:54 testhoteles.hs.com kernel: sd 0:0:0:0: [sda] tag#13 FAILED Result: hostbyte=DID_BAD_TARGET driverbyte=DRIVER_OK cmd_age=0s

6月 17 11:57:54 testhoteles.hs.com kernel: sd 0:0:0:0: [sda] tag#13 CDB: ATA command pass through(16) 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00

6月 17 12:27:54 testhoteles.hs.com kernel: sd 0:0:0:0: [sda] tag#15 FAILED Result: hostbyte=DID_BAD_TARGET driverbyte=DRIVER_OK cmd_age=0s

6月 17 12:27:54 testhoteles.hs.com kernel: sd 0:0:0:0: [sda] tag#15 CDB: ATA command pass through(16) 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00

6月 17 12:57:54 testhoteles.hs.com kernel: sd 0:0:0:0: [sda] tag#17 FAILED Result: hostbyte=DID_BAD_TARGET driverbyte=DRIVER_OK cmd_age=0s

6月 17 12:57:54 testhoteles.hs.com kernel: sd 0:0:0:0: [sda] tag#17 CDB: ATA command pass through(16) 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00

6月 17 13:27:54 testhoteles.hs.com kernel: sd 0:0:0:0: [sda] tag#3 FAILED Result: hostbyte=DID_BAD_TARGET driverbyte=DRIVER_OK cmd_age=0s

6月 17 13:27:54 testhoteles.hs.com kernel: sd 0:0:0:0: [sda] tag#3 CDB: ATA command pass through(16) 85 06 2c 00 00 00 00 00 00 00 00 00 00 00 e5 00

# Check whether /dev/sda responds by reading detailed, current information directly from the drive

[root@testhoteles ~]# hdparm -I /dev/sda

/dev/sda:

HDIO_DRIVE_CMD(identify) failed: Input/output error

# Check whether /dev/sdb responds by reading detailed, current information directly from the drive

[root@testhoteles ~]# hdparm -I /dev/sdb

/dev/sdb:

ATA device, with non-removable media

Model Number: ST500DM002-1BD142

Serial Number: Z3TQ7THR

Firmware Revision: KC47

Transport: Serial, SATA Rev 3.0

Standards:

Used: unknown (minor revision code 0x0029)

Supported: 8 7 6 5

Likely used: 8

Configuration:

Logical max current

cylinders 16383 16383

heads 16 16

sectors/track 63 63

--

CHS current addressable sectors: 16514064

LBA user addressable sectors: 268435455

LBA48 user addressable sectors: 976773168

Logical Sector size: 512 bytes

Physical Sector size: 4096 bytes

Logical Sector-0 offset: 0 bytes

device size with M = 1024*1024: 476940 MBytes

device size with M = 1000*1000: 500107 MBytes (500 GB)

cache/buffer size = 16384 KBytes

Nominal Media Rotation Rate: 7200

Capabilities:

LBA, IORDY(can be disabled)

Queue depth: 32

Standby timer values: spec'd by Standard, no device specific minimum

R/W multiple sector transfer: Max = 16 Current = 16

Recommended acoustic management value: 208, current value: 208

DMA: mdma0 mdma1 mdma2 udma0 udma1 udma2 udma3 udma4 udma5 *udma6

Cycle time: min=120ns recommended=120ns

PIO: pio0 pio1 pio2 pio3 pio4

Cycle time: no flow control=120ns IORDY flow control=120ns

Commands/features:

Enabled Supported:

* SMART feature set

Security Mode feature set

* Power Management feature set

* Write cache

* Look-ahead

* Host Protected Area feature set

* WRITE_BUFFER command

* READ_BUFFER command

* DOWNLOAD_MICROCODE

Power-Up In Standby feature set

* SET_FEATURES required to spinup after power up

SET_MAX security extension

* Automatic Acoustic Management feature set

* 48-bit Address feature set

* Device Configuration Overlay feature set

* Mandatory FLUSH_CACHE

* FLUSH_CACHE_EXT

* SMART error logging

* SMART self-test

* General Purpose Logging feature set

* WRITE_{DMA|MULTIPLE}_FUA_EXT

* 64-bit World wide name

Write-Read-Verify feature set

* WRITE_UNCORRECTABLE_EXT command

* {READ,WRITE}_DMA_EXT_GPL commands

* Segmented DOWNLOAD_MICROCODE

* Gen1 signaling speed (1.5Gb/s)

* Gen2 signaling speed (3.0Gb/s)

* Gen3 signaling speed (6.0Gb/s)

* Native Command Queueing (NCQ)

* Phy event counters

* unknown 76[15]

Device-initiated interface power management

* Software settings preservation

* SMART Command Transport (SCT) feature set

* SCT Read/Write Long (AC1), obsolete

* SCT Write Same (AC2)

* SCT Error Recovery Control (AC3)

* SCT Features Control (AC4)

* SCT Data Tables (AC5)

unknown 206[12] (vendor specific)

unknown 206[13] (vendor specific)

Security:

Master password revision code = 65534

supported

not enabled

not locked

frozen

not expired: security count

supported: enhanced erase

76min for SECURITY ERASE UNIT. 76min for ENHANCED SECURITY ERASE UNIT.

Logical Unit WWN Device Identifier: 5000c50064a40353

NAA : 5

IEEE OUI : 000c50

Unique ID : 064a40353

Checksum: correct

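The failed disk can be identified by elimination using the identifiers of the healthy disk: the disk with the serial number and WWN below is healthy, so the other disk is the failed one.

Serial Number: Z3TQ7THR

LU WWN Device Id: 5 000c50 064a40353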
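The elimination step can be scripted by printing the serial number of every disk that still answers. This is a minimal sketch assuming smartctl is installed and that the disks appear as /dev/sd?; `serial_of` and `list_serials` are hypothetical helper names. A disk that no longer responds, like /dev/sda here, is expected to show an empty serial.

```shell
#!/bin/bash
# Extract the serial number from `smartctl -i` output read on stdin.
serial_of() {
    awk -F': *' '/^Serial Number/ { print $2 }'
}

# Print "<device> <serial>" for each /dev/sd? disk (run as root).
list_serials() {
    local dev
    for dev in /dev/sd?; do
        printf '%s %s\n' "$dev" "$(smartctl -i "$dev" 2>/dev/null | serial_of)"
    done
}
```

After sourcing the file, run `list_serials` and match the printed serials against the labels on the physical drives before pulling one.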

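After replacing the failed disk with a new one (and creating the /dev/sda1 partition on it), add the new disk to the software RAID array

# Add the new disk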

[root@testhoteles ~]# mdadm /dev/md1 --add /dev/sda1

mdadm: added /dev/sda1

# Check the status; the array is now rebuilding

[root@testhoteles ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Wed May 8 06:11:58 2024

Raid Level : raid1

Array Size : 488253440 (465.63 GiB 499.97 GB)

Used Dev Size : 488253440 (465.63 GiB 499.97 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Intent Bitmap : Internal

Update Time : Tue Jun 17 13:46:13 2025

State : active, degraded, recovering

Active Devices : 1

Working Devices : 2

Failed Devices : 0

Spare Devices : 1

Consistency Policy : bitmap

Rebuild Status : 0% complete

Name : testhoteles.hs.com:1 (local to host testhoteles.hs.com)

UUID : 611ac412:b50551be:6cf89141:7d2d4580

Events : 2033885

Number Major Minor RaidDevice State

2 8 1 0 spare rebuilding /dev/sda1

1 8 17 1 active sync /dev/sdb1

# Rebuild in progress

[root@testhoteles ~]# cat /proc/mdstat

Personalities : [raid1]

md1 : active raid1 sda1[2] sdb1[1]

488253440 blocks super 1.2 [2/1] [_U]

[>....................] recovery = 0.0% (38016/488253440) finish=7794.8min speed=1043K/sec

bitmap: 3/4 pages [12KB], 65536KB chunk

unused devices: <none>
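The member map at the end of the blocks line (`[_U]` above) marks a missing or rebuilding member with `_`, which makes degraded arrays easy to spot in a script. The sketch below reads /proc/mdstat-style text from stdin and prints the names of arrays whose map contains `_`; `degraded_arrays` is a hypothetical helper name.

```shell
#!/bin/bash
# Print the name of every md array whose member map (e.g. [_U]) contains "_".
# Reads /proc/mdstat-formatted text on stdin.
degraded_arrays() {
    awk '
        /^md[0-9]+ :/ { name = $1 }                 # remember the current array
        match($0, /\[[U_]+\]/) {                    # member map such as [UU] or [_U]
            if (index(substr($0, RSTART, RLENGTH), "_") > 0) print name
        }
    '
}
```

On the server this would be called as `degraded_arrays < /proc/mdstat`; empty output means no array is degraded.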

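4. LVM VG name conflict

New machine: runs CentOS 7 with the LVs /dev/centos/root and /dev/centos/swap; the VG is named centos

Old machine: also runs CentOS 7 with the LVs /dev/centos/root and /dev/centos/swap; the VG is named centos

The old machine's disk has bad sectors and the elasticsearch7 service fails when reading its indices, so the disk can no longer be used. It has to be replaced, and its data migrated to the new disk

Problem: the new and the old machine both have a VG named centos, so how can the data be migrated?

4.1 Current LVM information

PV information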

[root@syslog ~]# pvdisplay

--- Physical volume ---

PV Name /dev/sda2

VG Name centos

PV Size 223.08 GiB / not usable 3.00 MiB

Allocatable yes (but full)

PE Size 4.00 MiB

Total PE 57108

Free PE 0

Allocated PE 57108

PV UUID pUfIZ0-Fmwd-Y3IT-E794-ZNZa-RfkU-wtFeTE

--- Physical volume ---

PV Name /dev/sdd2

VG Name centos

PV Size <1.82 TiB / not usable 4.00 MiB

Allocatable yes (but full)

PE Size 4.00 MiB

Total PE 476806

Free PE 0

Allocated PE 476806

PV UUID ZAsYXD-fdeV-AGoV-oP0P-BmiK-yZ7p-dgPTp2

VG information

[root@syslog ~]# vgdisplay

--- Volume group ---

VG Name centos

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 7

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 2

Open LV 2

Max PV 0

Cur PV 1

Act PV 1

VG Size <223.08 GiB

PE Size 4.00 MiB

Total PE 57108

Alloc PE / Size 57108 / <223.08 GiB

Free PE / Size 0 / 0

VG UUID 0kZqzY-CU3x-WO5g-Yhsu-WPsV-34O8-beg0Fc

--- Volume group ---

VG Name centos

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 7

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 2

Open LV 0

Max PV 0

Cur PV 1

Act PV 1

VG Size <1.82 TiB

PE Size 4.00 MiB

Total PE 476806

Alloc PE / Size 476806 / <1.82 TiB

Free PE / Size 0 / 0

VG UUID b8uQE2-lSBQ-pszt-jYwa-XX9n-KS0z-wLGdqS

LV information

[root@syslog ~]# lvdisplay

--- Logical volume ---

LV Path /dev/centos/swap

LV Name swap

VG Name centos

LV UUID n0pO4Z-Htyg-O0Tt-Fb0P-ccnN-QnOB-9Y1Vl5

LV Write Access read/write

LV Creation host, time localhost.localdomain, 2025-04-07 17:23:21 +0800

LV Status available

# open 2

LV Size 7.81 GiB

Current LE 2000

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 253:1

--- Logical volume ---

LV Path /dev/centos/root

LV Name root

VG Name centos

LV UUID ofjacO-gWSX-2Yif-05ic-0bcy-fAVg-S2eSLn

LV Write Access read/write

LV Creation host, time localhost.localdomain, 2025-04-07 17:23:22 +0800

LV Status available

# open 1

LV Size <215.27 GiB

Current LE 55108

Segments 2

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 253:0

--- Logical volume ---

LV Path /dev/centos/swap

LV Name swap

VG Name centos

LV UUID WJ7f1U-BCz1-LOZg-djAZ-GvIR-t06P-3F4KKD

LV Write Access read/write

LV Creation host, time syslog, 2024-09-23 14:38:28 +0800

LV Status NOT available

LV Size 7.81 GiB

Current LE 2000

Segments 1

Allocation inherit

Read ahead sectors auto

--- Logical volume ---

LV Path /dev/centos/root

LV Name root

VG Name centos

LV UUID waqOcy-ettH-58z1-WogT-GgDY-v97j-fTij7p

LV Write Access read/write

LV Creation host, time syslog, 2024-09-23 14:38:32 +0800

LV Status NOT available

LV Size 1.81 TiB

Current LE 474806

Segments 2

Allocation inherit

Read ahead sectors auto

4.2 Identify the conflicting VGs

[root@syslog ~]# pvs

PV VG Fmt Attr PSize PFree

/dev/sda2 centos lvm2 a-- <223.08g 0

/dev/sdd2 centos lvm2 a-- <1.82t 0

[root@syslog ~]# vgs -v

Cache: Duplicate VG name centos: Prefer existing 0kZqzY-CU3x-WO5g-Yhsu-WPsV-34O8-beg0Fc vs new b8uQE2-lSBQ-pszt-jYwa-XX9n-KS0z-wLGdqS

Cache: Duplicate VG name centos: Prefer existing b8uQE2-lSBQ-pszt-jYwa-XX9n-KS0z-wLGdqS vs new 0kZqzY-CU3x-WO5g-Yhsu-WPsV-34O8-beg0Fc

Archiving volume group "centos" metadata (seqno 7).

Archiving volume group "centos" metadata (seqno 7).

Creating volume group backup "/etc/lvm/backup/centos" (seqno 7).

Cache: Duplicate VG name centos: Prefer existing 0kZqzY-CU3x-WO5g-Yhsu-WPsV-34O8-beg0Fc vs new b8uQE2-lSBQ-pszt-jYwa-XX9n-KS0z-wLGdqS

Archiving volume group "centos" metadata (seqno 7).

Archiving volume group "centos" metadata (seqno 7).

Creating volume group backup "/etc/lvm/backup/centos" (seqno 7).

VG Attr Ext #PV #LV #SN VSize VFree VG UUID VProfile

centos wz--n- 4.00m 1 2 0 <223.08g 0 0kZqzY-CU3x-WO5g-Yhsu-WPsV-34O8-beg0Fc

centos wz--n- 4.00m 1 2 0 <1.82t 0 b8uQE2-lSBQ-pszt-jYwa-XX9n-KS0z-wLGdqS

4.3 Rename the VG by UUID (for example, rename the original VG centos to centos_old)

[root@syslog ~]# vgrename b8uQE2-lSBQ-pszt-jYwa-XX9n-KS0z-wLGdqS centos_old

Processing VG centos because of matching UUID b8uQE2-lSBQ-pszt-jYwa-XX9n-KS0z-wLGdqS

Volume group "b8uQE2-lSBQ-pszt-jYwa-XX9n-KS0z-wLGdqS" successfully renamed to "centos_old"

[root@syslog ~]# vgs -v

Archiving volume group "centos" metadata (seqno 7).

Creating volume group backup "/etc/lvm/backup/centos" (seqno 7).

VG Attr Ext #PV #LV #SN VSize VFree VG UUID VProfile

centos wz--n- 4.00m 1 2 0 <223.08g 0 0kZqzY-CU3x-WO5g-Yhsu-WPsV-34O8-beg0Fc

centos_old wz--n- 4.00m 1 2 0 <1.82t 0 b8uQE2-lSBQ-pszt-jYwa-XX9n-KS0z-wLGdqS
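When two VGs share a name, the UUID to pass to vgrename can be looked up from the PV that belongs to the old disk instead of being copied by hand. The sketch below assumes `pvs` is asked for the `pv_name,vg_name,vg_uuid` columns; `vg_uuid_for_pv` is a hypothetical helper that picks the matching row.

```shell
#!/bin/bash
# Print the VG UUID for a given PV.
# stdin: rows of "<pv_name> <vg_name> <vg_uuid>", e.g. from
#        pvs --noheadings -o pv_name,vg_name,vg_uuid
# $1:    the PV to look up, e.g. /dev/sdd2
vg_uuid_for_pv() {
    awk -v pv="$1" '$1 == pv { print $3 }'
}
```

A possible use, run as root: `uuid=$(pvs --noheadings -o pv_name,vg_name,vg_uuid | vg_uuid_for_pv /dev/sdd2)` followed by `vgrename "$uuid" centos_old`.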

4.4 Activate and verify the renamed VG

[root@syslog ~]# vgchange -ay centos_old

2 logical volume(s) in volume group "centos_old" now active

[root@syslog ~]# lvscan

ACTIVE '/dev/centos/swap' [7.81 GiB] inherit

ACTIVE '/dev/centos/root' [<215.27 GiB] inherit

ACTIVE '/dev/centos_old/swap' [7.81 GiB] inherit

ACTIVE '/dev/centos_old/root' [1.81 TiB] inherit

4.5 Mount the external disk on a local directory

[root@syslog ~]# mount /dev/centos_old/root /mnt/

[root@syslog ~]# ls /mnt

bin boot data dev download etc home lib lib64 media mnt opt proc root run sbin shell srv sys tmp usr var windows

### The elasticsearch and docker data is in this directory

[root@syslog ~]# ls /mnt/data/

docker rsyslog

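Then migrate the data with `rsync -avrPz SOURCE/* DEST/` or `cp -ar`:

- -a: archive

- -v: verbose

- -r: recursive (already implied by -a)

- -P: progress

- -z: compress

- SOURCE/*: sync everything under the source directory (the shell glob skips hidden files at the top level)

- DEST/: into the destination directory

If the disk has bad sectors, dd can be used instead:

dd if=/dev/sda of=/dev/sdb bs=64k conv=noerror,sync status=progress

conv=noerror,sync is the key to coping with bad sectors: noerror lets dd continue after a read error instead of aborting, and sync pads each unreadable block with zeros so that block offsets on the target stay aligned with the source.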
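A detail of the file-level copy is worth illustrating: `SOURCE/*` is expanded by the shell, which leaves out top-level dotfiles, whereas copying `SOURCE/.` (or `rsync -a SOURCE/ DEST/`) takes everything. The sketch below uses `cp -a`; `copy_tree` is a hypothetical helper name and the paths in the usage note are only examples.

```shell
#!/bin/bash
# Copy the full contents of a directory, including top-level hidden files,
# preserving ownership, permissions and timestamps (cp -a).
# $1: source directory   $2: destination directory (created if missing)
copy_tree() {
    local src="$1" dst="$2"
    mkdir -p "$dst"
    cp -a "$src/." "$dst/"
}
```

For the migration above this could be called as `copy_tree /mnt/data /data`, assuming /data is the target on the new disk.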